The Pandemic is Driving Cloud Computing/Data Center Revenue Sky High

Whether it’s working from home, meeting colleagues via Zoom, shopping for goods and services online, taking part in online classes, or playing a video game in the living room, the coronavirus pandemic has changed how we work, collaborate, shop, study, and play.

Given that most of these revolve around online activities, what’s been the effect on cloud computing and the data center market?

The short answer to the question is that services powered by data centers are booming, particularly among the big players that dominate the cloud computing and data center landscape.

As the pandemic set in, Amazon Web Services (AWS), the leader in cloud computing, set a new quarterly revenue record in early 2020 of $10.22 billion (up 33% from the previous year). AWS also accounted for over 75% of Amazon's overall operating income during the quarter.

Revenue from Microsoft's online services (which include its Azure cloud computing platform, Office 365, and other online services) was up 39% to $13.3 billion. (The company reported that Azure revenue was up nearly 60% but did not break out a dollar figure.)

Google Cloud reported revenue of $2.8 billion (up 52%) during the same timeframe; this figure includes revenue from its online SaaS G Suite software tools. Facebook, which, like Google, builds its own enterprise data centers to power its sprawling online services, reported revenue of $17.7 billion for the same quarter, up 18% year over year.

Data Center Best Practices: How to Design a Data Facility from a Network Engineer’s Perspective

Over the last 20+ years, data center facilities have evolved from their roots hosting Internet Service Providers and co-located corporate servers into today's highly sophisticated, enterprise-class operations, which power a range of mission-critical online services in the cloud, including remote power-by-the-hour computing, on-demand digital storage, carrier hotels (the modern evolution of server co-location), and software-as-a-service (SaaS), network-as-a-service (NaaS), and platform-as-a-service (PaaS) offerings.

During this timeframe, data center operations have converged on what we might call a reference design that solves a series of fairly rigid design constraints. In the following section, we’ll touch on some of the “best practices” that have evolved to satisfy these requirements.

Of course, things are changing all the time, so we'll also follow up with a look at some of the emerging issues (and the technologies being developed to address them) that are driving next-generation data center design.

Service Tiers

Our first stop in the survey of best practices that govern data center design is to identify the desired service tier level.

Service tiers represent the level of redundancy built into a data center design, which, in turn, forms the basis of the guarantee of service reliability promised to the end customer.

Service availability is often represented in customer service agreements by an uptime performance guarantee, ranging from a lower figure, such as 96% uptime, to a more robust 99.99% uptime or better, depending on the provider.
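To make those percentages concrete, the short Python sketch below converts an uptime guarantee into the maximum downtime it permits over a year. It assumes a non-leap 365-day year (8,760 hours); real service agreements may measure availability monthly and exclude scheduled maintenance, so treat these as rough figures.

```python
HOURS_PER_YEAR = 365 * 24  # assumes a non-leap year: 8,760 hours


def allowed_downtime_hours(uptime_percent: float) -> float:
    """Return the yearly downtime (in hours) permitted by an uptime guarantee."""
    return (1 - uptime_percent / 100) * HOURS_PER_YEAR


for uptime in (96.0, 99.0, 99.9, 99.99):
    hours = allowed_downtime_hours(uptime)
    print(f"{uptime}% uptime -> {hours:.1f} hours/year ({hours * 60:.0f} minutes)")
```

At 96% uptime, a service could be down for roughly 350 hours a year, while a 99.99% guarantee allows less than an hour (about 53 minutes).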

Tier 1 data centers offer the least redundancy and could be knocked offline by a single point of failure. At the other end of the spectrum, engineers build multiple redundant features into Tier 4 data center networks so that a single failure, such as a server failing, a hard disk crashing, or even an entire data center going offline, won't interrupt service to the end customer.
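The payoff from redundancy can be estimated with a standard reliability formula: if n identical components each have availability a and fail independently, a service that needs only one of them working has availability 1 - (1 - a)^n. The Python sketch below applies it. The independence assumption is a simplification (shared power, cooling, or software bugs can take out several "redundant" components at once), so real facilities will fall short of these idealized numbers.

```python
def parallel_availability(component_availability: float, copies: int) -> float:
    """Availability of a service that stays up while at least one of `copies`
    independent, identical components is working."""
    if not 0.0 <= component_availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    if copies < 1:
        raise ValueError("need at least one component")
    # The service fails only if every copy is down at the same time.
    all_down = (1.0 - component_availability) ** copies
    return 1.0 - all_down


# Hypothetical example: a single server that is up 99% of the time.
for n in (1, 2, 3):
    print(f"{n} copies -> {parallel_availability(0.99, n):.6%}")
```

Under these assumptions, a 99%-available server becomes roughly 99.99% available when paired with one standby, and about 99.9999% with three copies.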

Next, let’s look at a typical data center from the inside out, starting with the server.

Vertical Rack Designs Minimize Footprint

Vertical racks have been a de facto standard in data center server farms for decades. Vertical rack systems allow data engineers to populate the rack with individual server boards (known as blades), which slide into the rack like a drawer and connect to common services such as power supplies and network cabling. If a server goes down or needs planned maintenance, it can be removed quickly, and another can be snapped into its place.

Open Source Software and Virtualization Reduce Cost

Open source software solutions, such as the famous LAMP stack (which stands for Linux, Apache, MySQL, PHP), dramatically reduce the cost of provisioning multiple servers in a data center. (Today, of course, there are more options, including NGINX for the web server or Python as the interpreted language, as well as so-called "Cloud-Native" designs built entirely on cloud-based software tools, such as those from Amazon's AWS, Rackspace Cloud, or Microsoft Azure.)

But the biggest cost-saving innovation from a data center perspective was the introduction of virtualization software (also known as a hypervisor), which allows data engineers to run multiple virtual servers on a single server blade. Depending on the server's CPU power, memory capacity, and storage, it may be possible to run half a dozen or more virtual servers on one physical machine without any additional hardware investment.
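How many virtual servers fit on one physical machine depends on whichever resource runs out first. The rough Python sketch below estimates this from CPU cores and memory alone. The hardware sizes, VM sizes, CPU overcommit ratio, and hypervisor memory reservation are hypothetical placeholders; real capacity planning would also account for storage I/O, network bandwidth, and workload peaks.

```python
from dataclasses import dataclass


@dataclass
class Host:
    cores: int       # physical CPU cores on the server
    memory_gb: int   # installed RAM


@dataclass
class VmSize:
    vcpus: int       # virtual CPUs assigned to each VM
    memory_gb: int   # RAM reserved for each VM


def max_vms(host: Host, vm: VmSize, cpu_overcommit: float = 1.0,
            reserved_memory_gb: int = 0) -> int:
    """Estimate how many identical VMs fit on a host.

    cpu_overcommit: hypothetical ratio of virtual to physical cores the
    operator allows (1.0 means no overcommit).
    reserved_memory_gb: RAM held back for the hypervisor (an assumption).
    """
    by_cpu = int(host.cores * cpu_overcommit) // vm.vcpus
    by_memory = (host.memory_gb - reserved_memory_gb) // vm.memory_gb
    # The scarcer resource sets the limit.
    return max(0, min(by_cpu, by_memory))


# Hypothetical 32-core, 256 GB server running 4-vCPU / 16 GB virtual servers.
server = Host(cores=32, memory_gb=256)
vm = VmSize(vcpus=4, memory_gb=16)
print(max_vms(server, vm))                      # 8 (CPU-bound)
print(max_vms(server, vm, cpu_overcommit=2.0,
              reserved_memory_gb=16))           # 15 (memory-bound)
```

Either way, this hypothetical server lands in the "half a dozen or more" range, and the second call shows how the limiting resource can shift from CPU to memory once overcommit is allowed.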

Keep the Temperature under Control

Just one vertical rack kitted out with multiple servers can generate a lot of heat.

The problem gets worse the more racks you have, and in a modern data center, the main machine rooms can have hundreds of racks, lined up neatly in separate aisles.
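Nearly all of the electrical power a server draws ends up as heat, so a rack's power draw is a reasonable first estimate of its heat load. The Python sketch below converts watts to BTU per hour (1 W is about 3.412 BTU/hr) and to tons of cooling (1 ton = 12,000 BTU/hr). The per-rack power figure and rack count are hypothetical.

```python
WATTS_TO_BTU_PER_HOUR = 3.412   # 1 watt is about 3.412 BTU/hr
BTU_PER_HOUR_PER_TON = 12_000   # one ton of cooling capacity


def heat_load(rack_power_kw: float, rack_count: int) -> tuple[float, float]:
    """Return (BTU/hr, tons of cooling) for a group of identical racks,
    assuming all electrical power drawn is released as heat."""
    watts = rack_power_kw * 1_000 * rack_count
    btu_per_hour = watts * WATTS_TO_BTU_PER_HOUR
    return btu_per_hour, btu_per_hour / BTU_PER_HOUR_PER_TON


# Hypothetical machine room: 200 racks drawing 8 kW each.
btu, tons = heat_load(rack_power_kw=8, rack_count=200)
print(f"{btu:,.0f} BTU/hr, about {tons:,.0f} tons of cooling")
```

In this example, 200 racks at 8 kW each add up to roughly 5.5 million BTU/hr, or more than 450 tons of cooling capacity for the room.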

Data center HVAC specialists pay close attention to creating efficient ductwork to keep the valuable computer equipment cool; options include installing plenums either overhead above the drop ceiling or taking advantage of the raised floor (typically used in computer rooms) to run ductwork. Another common approach is to create so-called "cold aisles" enclosed by glass-door cabinetry which, much like a glass freezer case, keeps the cold air concentrated in front of the computer racks so that it is drawn through the equipment, with the warmed exhaust air directed toward a floor- or ceiling-mounted return air vent.

Given that the energy cost to operate a data center can exceed the cost of the hardware within it, engineers are constantly looking for ways to increase cooling efficiency (more on some of the newest technological ideas later…). Currently available energy-saving systems include the use of heat pumps or even innovative liquid-cooled systems that extract built-up heat from electronic components directly.
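A common way to track this overhead is Power Usage Effectiveness (PUE): total facility energy divided by the energy delivered to the IT equipment. A PUE of 1.0 would mean zero overhead for cooling, lighting, and power conversion. The Python sketch below computes it from hypothetical meter readings.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("facility total cannot be less than the IT load")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Fraction of the facility's energy spent on non-IT loads such as cooling."""
    return 1 - it_equipment_kwh / total_facility_kwh


# Hypothetical monthly meter readings for one facility.
total, it = 1_500_000, 1_000_000
print(f"PUE = {pue(total, it):.2f}")                  # PUE = 1.50
print(f"Overhead = {overhead_share(total, it):.0%}")  # Overhead = 33%
```

Because cooling is usually the largest non-IT load, cooling-efficiency gains like those described in this section show up directly as a lower PUE.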

Fire Suppression, Flood Control, and Geological Risks

The sheer volume of electronic equipment and power supplies concentrated in a relatively small area increases the potential for fire inside a data center.

Smoke detectors and heat sensors are a must.

Traditional fire suppression systems that rely on water sprinklers or water misters are one option; however, they will almost certainly damage electronic equipment, which is why many data centers rely on inert gas systems to extinguish fires without damaging equipment.

These systems work by displacing the oxygen in the room; however, they carry their own risks to human health: some fire suppression gases, such as Halon, are hazardous to breathe, and the lack of oxygen can lead to asphyxiation.

Engineers must also assess the risk from water intrusion due to potential roof leaks (such as during severe storms) or flooding events. Raised platforms in the machine room can help to some degree with ground flooding, but flood risks must be assessed carefully before selecting a facility location.

Other considerations that come into play when choosing a location are environmental and geological risks, such as whether the site is in a seismically active area or is likely to be hit by a hurricane, tornado, or wildfire.

Fail-Safe Replication and Backup Power

Data centers offering a high level of uptime guarantee (particularly the highest Tier 4 facilities) must provision multiple backup systems so that failed equipment, from servers to storage systems to network access points, can fail over to a redundant system waiting in the wings.

In the case of Tier 4 data centers, this could include switching over to an entirely separate data center on the network to prevent any potential customer downtime.

Backup power systems are an important part of keeping data centers online. A common configuration is a large bank of emergency batteries that supplies power the instant the main supply goes offline. The batteries carry the load until backup power sources, such as diesel generators, can be brought online; generators typically require a minute or more to fire up before they begin producing electrical power.
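Sizing that battery bank comes down to simple arithmetic: the usable stored energy divided by the load gives the runtime, which must comfortably exceed the time the generators need to start and take over. The Python sketch below checks this. The capacity, load, usable-fraction, inverter-efficiency, and safety-factor values are hypothetical assumptions, and real battery runtime also varies with battery age, temperature, and discharge rate.

```python
def battery_runtime_minutes(capacity_kwh: float, load_kw: float,
                            usable_fraction: float = 0.8,
                            inverter_efficiency: float = 0.95) -> float:
    """Estimate how long a battery bank can carry a constant load.

    usable_fraction and inverter_efficiency are hypothetical defaults; the
    right values depend on the battery chemistry and UPS hardware.
    """
    usable_kwh = capacity_kwh * usable_fraction * inverter_efficiency
    return usable_kwh / load_kw * 60


def bridges_generator_start(runtime_min: float, generator_start_min: float,
                            safety_factor: float = 3.0) -> bool:
    """True if the batteries outlast generator start-up with a safety margin."""
    return runtime_min >= generator_start_min * safety_factor


# Hypothetical: a 500 kWh battery bank carrying a 1,200 kW IT load.
runtime = battery_runtime_minutes(capacity_kwh=500, load_kw=1_200)
print(f"Runtime: {runtime:.1f} minutes")
print("Bridges a 1-minute generator start:", bridges_generator_start(runtime, 1.0))
```

With these assumed figures, the bank supplies about 19 minutes of power, far more than a one-minute generator start; the safety factor leaves room for a generator that fails to start on the first attempt.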

Keeping the Network Safe from Cyber Attack

Network security at a data center is often one of the main selling points that convince corporate IT departments to implement a cloud-based solution over an in-house implementation.

The thinking goes that data centers, particularly those with high service tier guarantees, should have more expertise and be better placed to respond around-the-clock to a potential cyber intrusion.

The better data center operators are constantly looking for ways to reduce the risk of cyber-attacks by hardening their network equipment, keeping their operating system and software patches up to date, and running drills that rehearse both the response to a cyber-attack and how to maintain business continuity in its aftermath.

In some cases, data center operators find it advantageous to partner with security specialists; one example is Cloudflare, which came to prominence by helping protect companies from distributed denial-of-service (DDoS) attacks.

Data Center Physical Security

As you may have noted, we started our discussion with the configuration of server racks and have moved steadily outward, touching on the machine room, heating and cooling system, and secure network access.

Now it’s time to touch on best practices for creating a secure facility and exterior security perimeter.

Unfortunately, people are a risk to a data center, which is why most facilities employ a rigorous security system that allows only a select number of trusted individuals to access the computer networking hardware. Advanced biometric scanning tools (such as retina scanners) are now commonly used in data centers to verify the identity of individuals.

Despite all best efforts, including background checks, personnel can be responsible for damage, whether from malicious intent or an accidental oversight. In response, data centers are looking for ways to automate their machine room installations as much as possible to reduce the need for human intervention. At the extreme, so-called "lights out" facilities use advanced automation and remote access to keep human workers out of the inner workings of the data center as much as possible.

As we all know, data is valuable. Many data centers also employ private security services and varying degrees of perimeter walls and gateways to prevent outsiders from gaining access to the property. (Amazon even uses explosion-proof containers to hand-deliver data to AWS facilities.)

How Can You Learn the Data Center Business?

As data center technology has grown and matured, new degree and certification programs have come along to prepare the next generation of data center managers and network engineers. Among the pioneering programs are a bachelor's degree in Data Centre Facilities Engineering offered by the Institute of Technology Sligo (Ireland) at its branch campus in Mons, Belgium, and a master's degree in Datacenter Systems Engineering offered by the Lyle School of Engineering at SMU in Dallas.

Next Generation Data Centers: High Power Consumption is the Achilles Heel of Data Centers

Several years ago, researchers studying computing trends made a disturbing prediction: if current trends continued, computer networking and electronic devices would consume 100% of our available electricity by the year 2040.

As noted earlier, data center computers use quite a bit of energy and give it off as unwanted heat. (Scientific American has published an explanation of why.)

The big data center operators have responded. Companies such as Google and Microsoft have committed to becoming net-zero carbon emitters within the next decade or so (Microsoft says it will eventually become carbon negative).

How is this possible?

In the short term, computers are becoming more energy efficient, and data centers, always concerned with operating costs, are selecting the most energy-efficient machines, even at the expense of peak performance. Companies are also turning to renewable energy sources, such as hydroelectric, wind, and solar power.

As a result, efficiency has increased across the data center industry. In fact, data centers may be part of the solution insofar as they can be much more efficient than in-house corporate IT departments, which may lack access to advanced cooling systems, sustainable power sources, and so forth.

Widespread Shift to New Technologies (such as Artificial Intelligence) Could Drive Data Center Energy Use Even Higher

However, we are not out of the woods yet.

What new computing technologies giveth, they also taketh away.

Take, for example, the wildly popular graphics accelerator (GFX) cards from Nvidia and AMD, which power exceptionally realistic video games played on consoles and PCs.

These same GFX cards are also used by cryptocurrency miners seeking to cash in on the rising value of virtual currencies.

And unfortunately, from an energy use perspective, GFX cards are now also widely used to power AI and machine learning workloads (think TensorFlow from Google).

This is a very consequential development for the data center industry because A) GFX cards are very energy intensive to operate, and B) machine learning-based AI applications are a huge growth opportunity for data centers operating massive servers kitted out with multiple GFX cards.

And the increased projected demand for AI and machine learning computing is not the only new development that could drive demand (and hence energy usage) sky-high. Here are a few others waiting in the wings:

- Quantum computing, if it ever comes to the market, depends on extremely cold operating temperatures, which would increase the demands on data center energy use.

- 5G networks powering a range of technology advances, from Industry 4.0 to self-driving vehicles, will dramatically increase the demand for data center-based computing.

- New low Earth orbit satellite systems, such as Elon Musk's Starlink, could drive demand for cloud-based services in currently underserved rural and remote areas.

- And looking further ahead, Mojo Vision's augmented reality contact lenses or the prototype brain-machine interfaces from Elon Musk's Neuralink could make cyborg-style computing a mainstream technology, which could also significantly increase data center energy demand.

So the question is: will new computing technologies, such as artificial intelligence, help us manage energy consumption in an intelligent way for a more sustainable planet, or will they help us, in the words of Wired magazine, burn the planet to the ground?

What’s the Future of Next Generation Data Centers?

So it's back to the drawing board for data center engineers, who need to look for even more advanced next-generation solutions to rein in energy use.

Fortunately, there are some promising ideas that are being evaluated now.

Microsoft’s Project Natick

Locating data centers near bodies of water, which can cool hot air extracted by the HVAC systems, has been used in many high-profile sustainable building designs. However, Microsoft's Project Natick takes this one step further: the company has built a data center module that can be fully submerged and cooled by the ocean.

Light-based Computing

Researchers at the University of North Carolina at Chapel Hill are exploring ways to use light, rather than electricity, to transmit data within computer systems, an approach that should reduce energy consumption and require less cooling. Optical networking is already widely adopted at larger scales, but researchers are trying to discover techniques to apply the same data-handling principles at the micro level as well.

MIT’s Magnetic Wave Computing Research

MIT researchers are looking at a different approach to replacing electricity in computing by using magnetic waves to transmit data. Like the light-based computing research at UNC Chapel Hill, the MIT researchers are exploring ways to create nanometer-sized communication channels (and barriers) that could not only reduce energy use but also help sustain Moore's law, the historical doubling of transistor counts (and with them, computing power) roughly every two years.

Long Term Data Storage in Glass

Current storage methods for archiving big data sets are not a long-term solution, as hard drives and even SSDs deteriorate over time, not to mention the energy cost to operate them. Microsoft's Project Silica stores data in glass, and its researchers expect the medium to retain data for up to 10,000 years.

Long Term Data Storage in Artificial DNA

Glass is not the only material that researchers are exploring for data storage. Molecular biologist Nick Goldman and researchers at the European Bioinformatics Institute have created a proof of concept based on artificial DNA. According to Goldman, "all the information in the world could be encoded and stored in DNA, and it would fit in the back of an SUV."
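The basic idea is that DNA's four bases (A, C, G, and T) can each stand for two bits of data. The Python sketch below shows that naive mapping, round-tripping bytes to a base sequence and back. It is an illustration only, not Goldman's method: the scheme his team published was more elaborate, designed among other things to avoid runs of the same base, which are hard to synthesize and sequence accurately, and practical systems also add redundancy and error correction.

```python
# Naive 2-bits-per-base mapping (illustrative only, not the EBI scheme).
BASES = "ACGT"
BASE_TO_BITS = {base: i for i, base in enumerate(BASES)}


def encode(data: bytes) -> str:
    """Map each byte to four bases, most significant bit pair first."""
    out = []
    for byte in data:
        for shift in (6, 4, 2, 0):
            out.append(BASES[(byte >> shift) & 0b11])
    return "".join(out)


def decode(sequence: str) -> bytes:
    """Reverse encode(): every four bases become one byte."""
    if len(sequence) % 4:
        raise ValueError("sequence length must be a multiple of 4")
    result = bytearray()
    for i in range(0, len(sequence), 4):
        byte = 0
        for base in sequence[i:i + 4]:
            byte = (byte << 2) | BASE_TO_BITS[base]
        result.append(byte)
    return bytes(result)


strand = encode(b"Hi")
print(strand)            # CAGACGGC
print(decode(strand))    # b'Hi'
```

Even this two-byte example produces a "GG" run, exactly the kind of repeat that the published scheme was built to avoid.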

Hydrogen Fuel Cells for Data Center Backup Power

Diesel generators are an expensive way for data centers to provide emergency backup power. Microsoft is developing a more environmentally friendly alternative, hydrogen fuel cells, which could cost less to maintain than diesel generators.

Formaspace is Your Data Center Partner

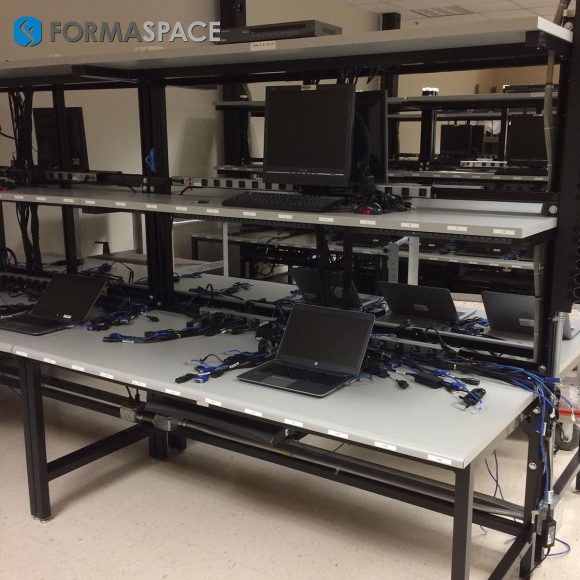

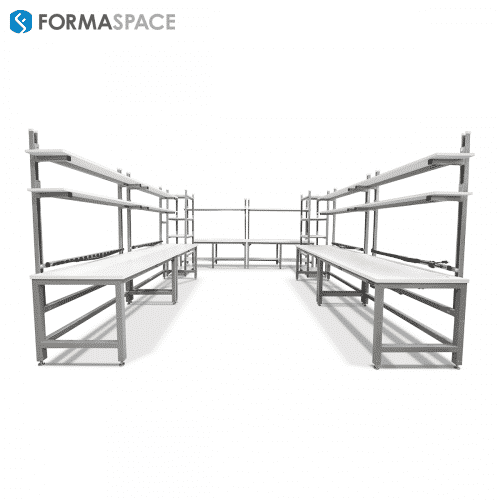

If you can imagine it, we can build it – here at our Austin, Texas factory headquarters.

Formaspace has the skills and expertise to build custom industrial furniture solutions for your unique needs, including fabricating specialized workbench, material handling, and storage solutions for data centers, IT labs, and more.

Find out how we can work together.

Contact your Formaspace Design Consultant today.